Hancheng Min

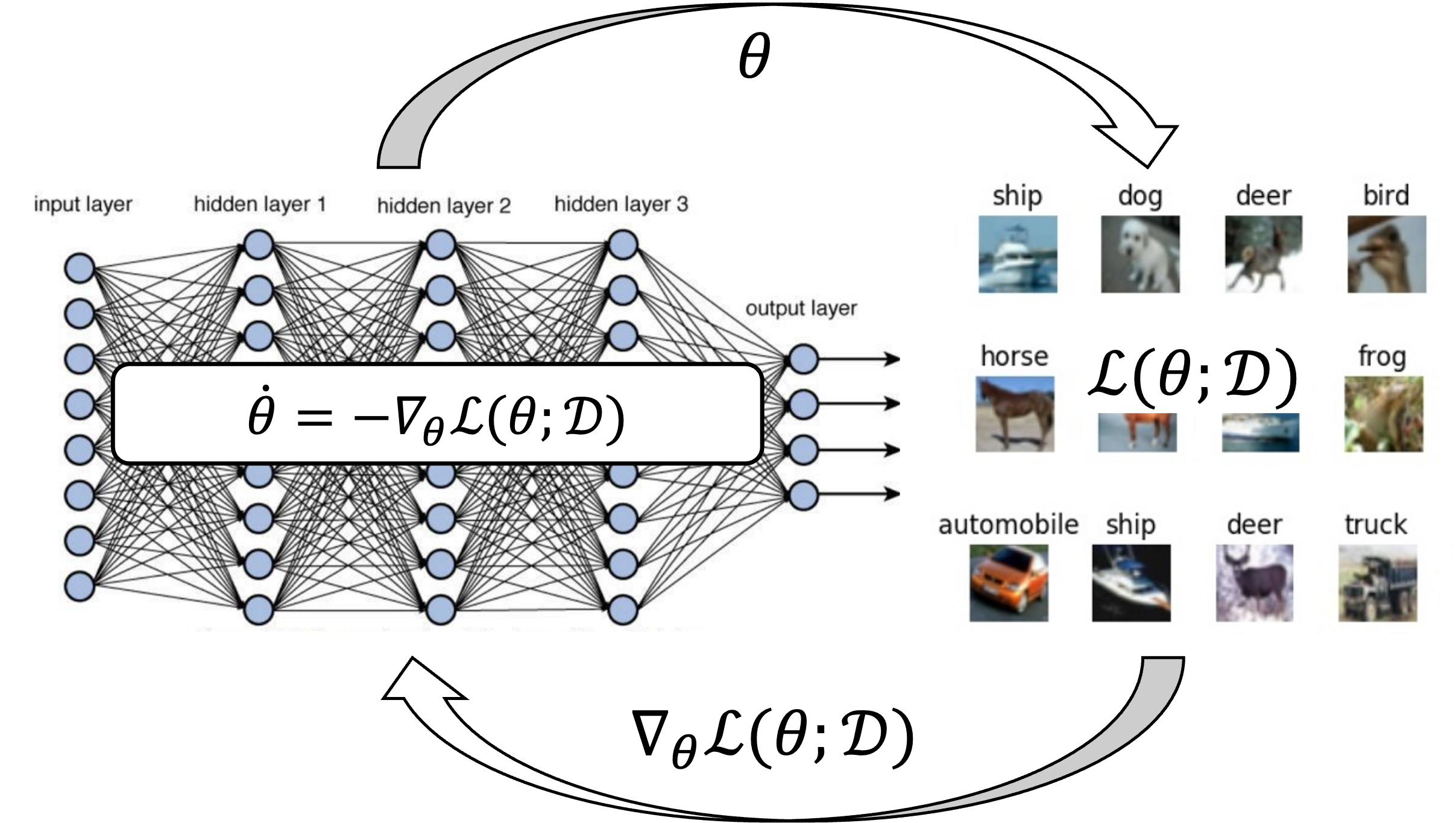

I am a Tenure-track Associate Professor at the Institute of Natural Sciences (INS) and the School of Mathematics (SMS), Shanghai Jiao Tong University. My research centers on building mathematical principles that facilitate the interplay between machine learning and dynamical systems. Currently, I am mainly interested in analyzing gradient-based optimization algorithms on overparametrized neural networks from a dynamical systems perspective.

Recent Updates

[Feb, 11, 2026] Our tutorial paper On the Convergence, Implicit Bias and Edge of Stability of Gradient Descent in Deep Learning has been accepted to IEEE Signal Processing Magazine!

[Dec, 10, 2025] I gave a talk titled Understanding Incremental Learning with Closed-form Solution to Gradient Flow on Overparameterized Matrix Factorization at CDC 2025 in Rio de Janeiro.

[Nov, 23, 2025] I gave a talk titled Learning Dynamics in the Feature Learning Regime: Implicit Bias, Neural Collapse, and Robustness at NYU Shanghai.

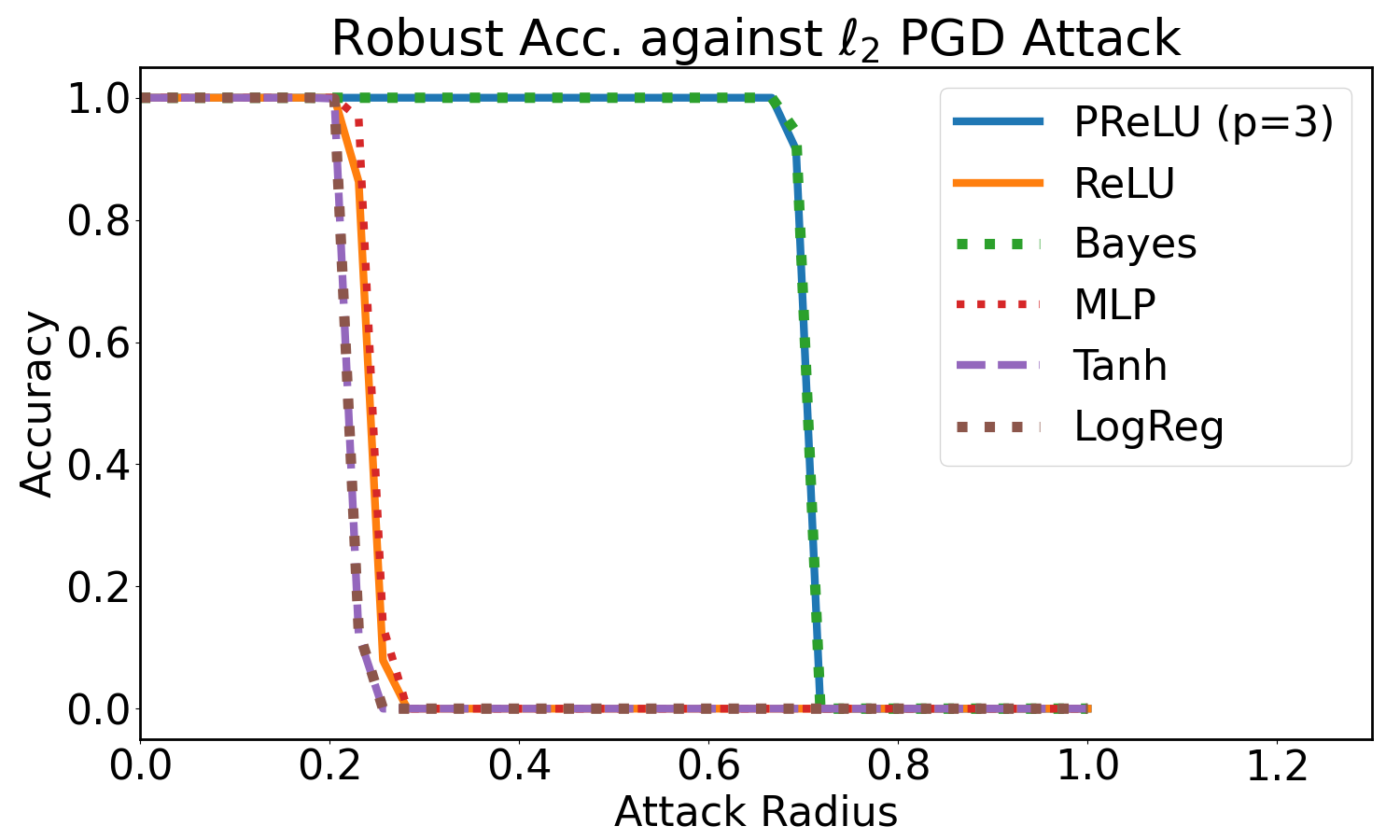

[Sep, 18, 2025] Our papers Neural Collapse under Gradient Flow on Shallow ReLU Networks for Orthogonally Separable Data and Convergence Rates for Gradient Descent on the Edge of Stability for Overparametrised Least Squares have been accepted to NeurIPS 2025!

[Sep, 09, 2025] I will serve as an Area Chair for ICLR 2026 and AISTATS 2026.

Recent Publications

- On the Convergence, Implicit Bias and Edge of Stability of Gradient Descent in Deep Learning. IEEE Signal Processing Magazine (IEEE SPM), Mar 2026. To appear.